Humans, Robots, and a Virus: How COVID-19 Has Changed Our Relationship With Artificial Intelligence

The COVID-19 pandemic disrupted the lives of communities around the world, driving many people to turn to new technologies for social and emotional support. What can artificial intelligence technologies like “Replika” and “the Squirrel” reveal about our own emotional world?

Since the beginning of lockdown restrictions and social distancing in many countries in early 2020, new technologies have increasingly mediated human communication and relationships. Recent scientific studies and news reports have identified the COVID-19 pandemic as an accelerator of technological development and adoption in societies around the world. The pandemic seems to have increased people’s acceptance of robots in particular, even in Western societies like the United States and Germany, where resistance to robots had been greater than in countries like Japan.

Autonomous delivery robots, such as the ones produced by Starship Technologies, were first launched before the COVID-19 pandemic began, but since March 2020 they have become an increasingly common sight in certain cities and on selected university campuses.

These delivery robots are becoming a solution to the growing demand for contactless delivery amid the ongoing pandemic, at a time when businesses are facing staffing shortages and trying to cut costs. Their boxlike shapes do not suggest a living entity, yet these delivery robots have nonetheless been anthropomorphized in many ways, due in part to their autonomous motion. Observations of pedestrians interacting with the delivery robots reveal that people gender them, name them, speak affectionately about them, and, in some cases, abuse them. In fact, when a Starship robot was hit by a car on one university campus, mourners held a vigil for the bot.

Why do people get angry at robots? Why do we develop attachments to robots and mourn their “deaths” if they are destroyed or stop functioning? As AI technologies become more ubiquitous among the privileged in wealthy societies, these questions become more pressing.

A Love-Hate Relationship

Since the beginning of the pandemic, virtual “friend” apps such as Replika have proliferated in an attempt to replace the social networks disrupted by quarantines, remote education, and remote work. Fifty-one percent of adults in the United States live alone, and loneliness, feelings of isolation, and depression increased during quarantine mandates in societies around the world.

According to one report, half a million users downloaded the virtual “friend” app Replika in April 2020, and traffic to the app almost doubled. The mental health chatbot Wysa, which uses evidence-based techniques from cognitive behavioral therapy (CBT) and meets clinical safety standards, also reportedly saw a spike in use during the isolation phase of the COVID-19 pandemic.

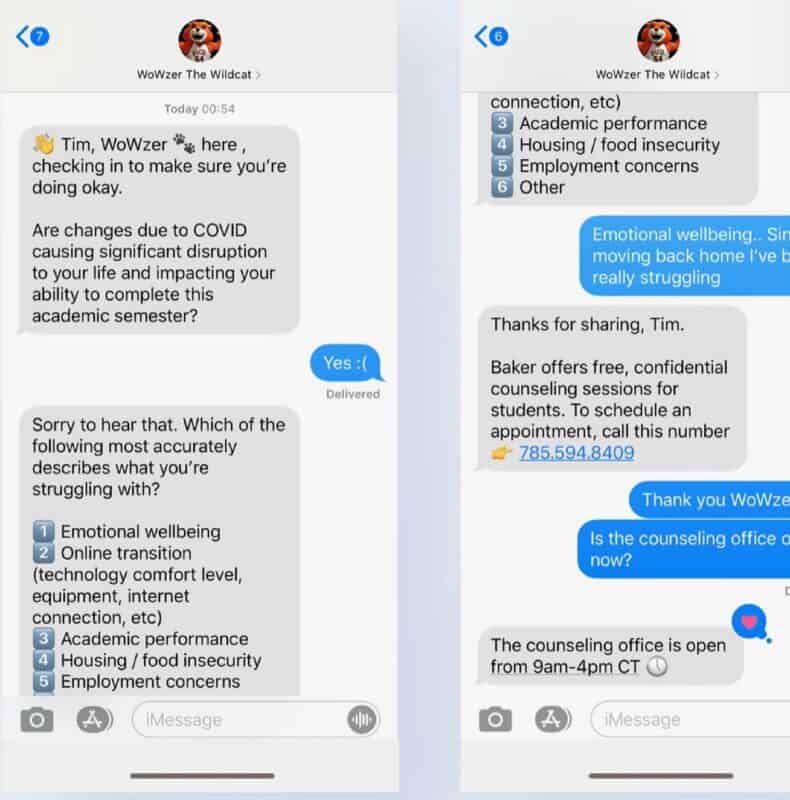

One AI chatbot meant to support student mental health (and, by extension, institutional retention) was introduced at a liberal arts college in the United States when the pandemic began. Administrators at the college named the bot “the Squirrel” because students seem to have friendly feelings toward the actual small mammals found all over campus.

Campus squirrels are easily anthropomorphized because frequent exposure to humans has accustomed them to our presence. The chatbot relies on natural language processing, so students can use it as a resource much as they would Apple’s Siri or Amazon’s Alexa. In addition, the Squirrel sends alerts if it detects a student using concerning words or phrases like “I don’t want to be here,” and, by monitoring students’ language, it generates retention risk profiles of students.

Juniors and seniors at the college, however, say that they don’t like the Squirrel and resist its pings and nudges.

Delivery bots, virtual “friend” apps, and student retention bots represent just one side of our relationship with anthropomorphized AI during the pandemic. Amazon’s use of robots in its warehouses brings another, less privileged relationship to the fore: the human workers alongside them are driven to meet high productivity quotas.

Hostility toward robots, such as the attack that destroyed the hitchhiking robot HitchBOT in Philadelphia, has old roots in Luddism and science fiction. Yet we wealthy consumers seem unwilling to give up our comforts and amenities, supply chain issues or not, and the robots some love to hate are what keep prices so attractive.

As ethicists have long noted, how societies treat their minorities is the true test of those societies’ ethics and values. Although more research is needed, robot abuse is an ethical slippery slope: normalizing cruelty to robots (à la Westworld, to take an extreme example) could lead to more violence and mistreatment of humans and other living creatures.

Animal protection laws have proliferated in the West; is it time to consider robots as something other than private property? Doing so might allow us to imagine and shape just futures built on respect and care for all life forms.

Learn more in this related title from De Gruyter:

“Animals, Machines, and AI: On Human and Non-Human Emotions in Modern German Cultural History” (edited by Erika Quinn & Holly Yanacek)


[Title image by sompong_tom/iStock/Getty Images Plus]